For a while now robots have been back in the news with a vengeance, and almost on cue they seem to have revived many of the nightmares we might have thought were locked up in the attic of the mind, along with all sorts of other stuff from the 1980s that we hoped we would never need again.

Big fears should probably only be tackled one at a time, so let’s leave aside for today the question of whether robots are likely to kill us, and focus on what should be an easier nut to crack, and a less frightening nut at that: namely, whether we are in the process of automating our way into a state of permanent, systemic unemployment.

Alas, even this seemingly less fraught question is no less difficult to answer. Like everything else, the issue seems to have given rise to two distinct sides, neither of which has a clear monopoly on the truth. Unlike elsewhere, however, the two sides in the debate over “technological unemployment” usually split less on ideological grounds than on the basis of professional expertise. That is, those who dismiss the argument that advances in artificial intelligence and robotics have displaced, or are about to displace, the types of work now done by humans, to the extent that we face a crisis of permanent underemployment and unemployment the likes of which has never been seen before, tend to be economists. How such an optimistic bunch came to be known as dismal scientists is beyond me. Note how they are also on the optimistic side of their debate with environmentalists.

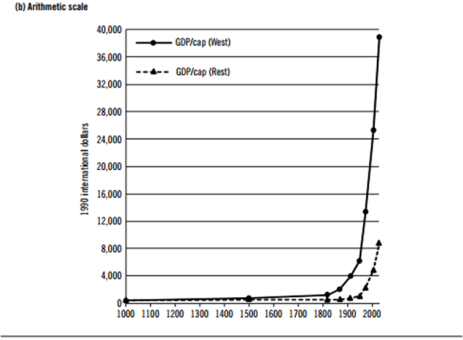

Economists are among the first to remind us that we’ve seen fears of looming robot-induced unemployment before, whether those of Ned Ludd and his followers in the 19th century, or as close to us as the 1960s. The destruction of jobs has, in the past at least, been achieved through the kinds of transformation that created brand new forms of employment. In 1915 nearly 40% of Americans were agricultural laborers of some sort; now that number hovers around 2%. These farmers weren’t replaced by “robots,” but they certainly were replaced by machines.

Still, we certainly don’t have a 40% unemployment rate. Rather, as the number of farm laborer positions declined, they were replaced by jobs that didn’t even exist in 1915. The one place these former farmers have not gone, though it is where they probably would have gone in 1915, is manufacturing, which isn’t much of an option today. For in that sector something very similar to the hollowing out of employment in agriculture has taken place, with the percentage of laborers in manufacturing declining since 1915 from 25% to around 9% today. Here the workers really have been replaced by robots, though job prospects on the shop floor have declined just as much because the jobs have been globalized. Again, even at the height of the recent financial crisis we didn’t see 25% unemployment, at least not in the US.

Economists therefore continue to feel vindicated by history: any time machines have managed to supplant human labor, we’ve been able to invent whole new sectors of employment where the displaced or their children have been able to find work. It seems we’ve got nothing to fear from the “rise of the robots.” Or do we?

Again setting aside the possibility that our mechanical servants will go all R.U.R. on us, anyone who takes seriously Ray Kurzweil’s timeline, in which computers match human intelligence by the 2020s and exceed it a billionfold by 2045, has to come to the conclusion that most jobs as we know them are toast. The problem here, and one that economists mostly fail to take into account, is that past technological revolutions ended up replacing human brawn and allowing workers to move up into cognitive tasks. Human workers had somewhere to go. But machines that did the same for tasks that require intelligence, and that were indeed billions of times smarter than us, would make human workers about as essential to the functioning of a company as a Leaper ornament is to the functioning of a Jaguar.

Then again, perhaps we shouldn’t take Kurzweil’s timeline all that seriously in the first place. Skepticism would seem to be in order because the Moore’s Law-based exponential curve that is at the heart of Kurzweil’s predictions appears to have started to go all sigmoidal on us. That was the case made by John Markoff recently over at The Edge. In an interview about the future of Silicon Valley he said:

All the things that have been driving everything that I do, the kinds of technology that have emerged out of here that have changed the world, have ridden on the fact that the cost of computing doesn’t just fall, it falls at an accelerating rate. And guess what? In the last two years, the price of each transistor has stopped falling. That’s a profound moment.

Kurzweil argues that you have interlocked curves, so even after silicon tops out there’s going to be something else. Maybe he’s right, but right now that’s not what’s going on, so it unwinds a lot of the arguments about the future of computing and the impact of computing on society. If we are at a plateau, a lot of these things that we expect, and what’s become the ideology of Silicon Valley, doesn’t happen. It doesn’t happen the way we think it does. I see evidence of that slowdown everywhere. The belief system of Silicon Valley doesn’t take that into account.

Although Markoff admits there has been great progress in pattern recognition, there has been nothing similar for the kinds of routine physical tasks found in much low-skilled, mobile work. As evidenced by the recent DARPA challenge, if you want a job safe from robots, choose something for a living that requires mobility and the performance of a variety of tasks: plumber, home health aide, etc.

Markoff also sees job safety at the higher end of the pay scale, in cognitive tasks that computers seem far from being able to perform:

We haven’t made any breakthroughs in planning and thinking, so it’s not clear that you’ll be able to turn these machines loose in the environment to be waiters or flip hamburgers or do all the things that human beings do as quickly as we think. Also, in the United States the manufacturing economy has already left, by and large. Only 9 percent of the workers in the United States are involved in manufacturing.

The upshot of all this is that there’s less to be feared from technological unemployment than many think:

There is an argument that these machines are going to replace us, but I only think that’s relevant to you or me in the sense that it doesn’t matter if it doesn’t happen in our lifetime. The Kurzweil crowd argues this is happening faster and faster, and things are just running amok. In fact, things are slowing down. In 2045, it’s going to look more like it looks today than you think.

The problem, I think, with the case against technological unemployment made by many economists and by someone like Markoff is that they seem to be taking on a rather weak and caricatured version of the argument. That at least is the conclusion one comes to after reading what is perhaps the most reasoned and meticulous book to try to convince us that the boogeyman of robots stealing our jobs might have been all in our imagination before, but that it is real indeed this time.

I won’t so much review the book I am referencing, Martin Ford’s Rise of the Robots: Technology and the Threat of a Jobless Future, as lay out how he responds to the case from economics that we’ve been here before and have nothing to worry about, and to the observation of Markoff that because Moore’s Law has hit a wall (has it?), we need no longer worry so much about the transformative implications of embedding intelligence in silicon.

I’ll take the second one first. In terms of the idea that the end of Moore’s Law will derail tech innovation Ford makes a pretty good case that:

Even if the advance of computer hardware capability were to plateau, there would be a whole range of paths along which progress could continue. (71)

Progress in software, and in new types of (especially parallel) computer architecture, will continue to be mined long after Moore’s Law has reached its apogee. Cloud computing should also imply that silicon needn’t compete with neurons at scale. You don’t have to fit the computational capacity of a human individual into a machine of roughly similar size; you could instead tap remotely into a much, much larger and more energy-intensive supercomputer that gives you human-level capacities. Ultimate density has become less important.

What this means is that we should continue to see progress (and perhaps very rapid progress) in robotics and artificially intelligent agents. We have what is often thought of as a three-dimensional labor market, composed of agriculture, manufacturing and the service sector, and the first two are already largely mechanized and automated. The place where the next wave will fall hardest is therefore the service sector, and the question becomes: will there be any place left for workers to go?

Ford makes a pretty good case that eventually we should be able to automate almost anything human beings do, for all automation means is breaking a task down into a limited number of steps. And the examples he comes up with, where we have already shown this is possible, are both surprising and sometimes scary.

Perhaps 70 percent of financial trading on Wall Street is now done by trading algorithms (which don’t seem any less inclined to panic). Algorithms now perform legal research, compose orchestral music, and independently discover scientific theories. And those are the fancy robots. Most are like ATMs, where it is the customer who now does part of the labor involved in some task. Ford thinks the fast food industry is ripe for innovation in this way, with customers designing their own food that some system of small robots then “builds.” Think of your own job. If you can describe it to someone in a discrete number of steps, Ford thinks the robots are coming for you.

I was thankful that Ford, though himself a technologist, places technological unemployment in a broader context and sees it as part and parcel of trends after 1970 such as a greater share of GDP moving from labor to capital, soaring inequality, stagnant wages for middle class workers, the decline of unions, and globalization. His solution to these problems is a guaranteed basic income, for which he makes a humane and non-ideological argument, reminding those on the right who might find the idea anathema that conservative heavyweights such as Milton Friedman have argued in its favor.

The problem from my vantage point is not that Ford has failed to make a good case against those on the economists’ side of the issue who would accuse him of committing the Luddite fallacy. It’s that perhaps his case is both premature and not radical enough. It is premature in the sense that while all the other trends, such as rising inequality and the decline of unions, are readily apparent in the statistics, technological unemployment is not.

Perhaps then technological unemployment is only a small part of a much larger trend pushing us in the direction of the entrenchment and expansion of inequality and away from the type of middle class society established in the last century. The tech culture of Silicon Valley and the companies it has built are here a little bit like Burning Man: an example of late capitalist culture at its seemingly most radical and imaginative, one that temporarily escapes rather than creates a truly alternative and autonomous political and social space.

Perhaps the types of technological transformation already here and looming that Ford lays out could truly serve as the basis for a new form of political and economic order, one that serves as an alternative to the inegalitarian turn, but he doesn’t explore them. Nor does he discuss the already present dark alternative to the kinds of socialism through AI we find in the “Minds” of Iain Banks: namely, the surveillance capitalism we have allowed to be built around us, which now stands as a bulwark against our preserving our humanity and preventing ourselves from becoming robots.