It’s hard to get your head around the idea of a humble prophet. Picturing Jeremiah screaming to the Israelites that the wrath of God is upon them and then adding “at least I think so, but I could be wrong…” or some utopian claiming the millennium is near, but then following it up with “then again this is just one man’s opinion…” would be the best kind of ridiculous- so out of character as to be both shocking and refreshing.

William Gibson is a humble prophet.

In part this stems from his understanding of what science fiction is for- not to predict the future, but to understand the present, with any right calls about things yet to happen likely just lucky guesses. Over the weekend I finished William Gibson’s novel The Peripheral, and I will take the humble man at his word: “The future is already here- it’s just not very evenly distributed.” As a reader I knew he wasn’t trying to make any definitive calls about the shape of tomorrow; he was trying to tell me how he understands the state of our world right now, including the parts of it that might be important in the future. So let me try to reverse engineer that, to excavate the picture of our present in the ruins of the world of tomorrow Gibson so brilliantly gave us in his gripping novel.

The Peripheral is a time-travel story, but a very peculiar one. In his imagined world we have gained the ability not to travel between past, present and future but to exchange information between different versions of the multiverse. You can’t reach into your own past, but you can reach into the past of an alternate universe that thereafter branches off from the particular version of the multiverse you inhabit. It’s a past that looks like your own history but isn’t.

The novel itself is the story of one of these encounters between “past” and “future.” The past in question is actually our imagined near future, somewhere in the American South, where the novel’s protagonist, Flynn, her brother Burton and his mostly veteran friends eke out their existence. (Even if I didn’t have daughters I would probably love Gibson’s use of strong female characters, but having two, I love it even more.) It’s a world that rang very true to me because it was a sort of dystopian extrapolation of the world where I both grew up and live now: a rural county where the economy is largely composed of “Hefty Mart” and people making drugs in their homes.

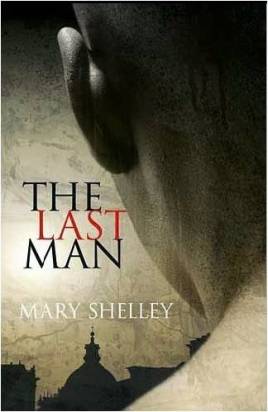

The farther future in the story is the world of London decades after a wrenching crisis known as the “jackpot”, much of whose devastation was brought about by global warming that went unchecked and resulted in the loss of billions of human lives and even greater destruction for the other species on earth. It’s a world of endemic inequality, celebrity culture and sycophants. And the major character from this world, Wilf Netherton, would have ended his days as a mere courtier to the wealthy had it not been for his confrontation with an alternate past.

So, to start, there are a few observations we can draw out from the novel about the present. The hollowing out of rural economies dominated by box stores? I see it all around me. The prevalence of meth labs as a keystone of an economy now held up only by the desperation of its people? Ditto.

The other present Gibson gives us some insight into is London, where Russian oligarchs established a kind of second Moscow after the breakup of the Soviet Union. That world may fade now with the collapse of the Russian ruble, but the broader trend will likely remain in place: corrupt elites who have made their millions or billions by pilfering their home countries are making their homes in, and ultimately shaping the fate of, the world’s greatest cities.

Both the near and far futures in Gibson’s novel are horribly corrupt. Local, state and even national politicians can not only be bought in Flynn’s America; their very jobs seem to be to put themselves up for sale. London of the farther future is corrupt to the bone as well. Indeed, it’s hard to say that government exists there at all except as a conduit for corruption. The detective Ainsley Lowbeer, another major character in the novel, who plays the role of the law in London, seems not even to be a private contractor but someone pursuing justice on her own dime. We may not have this level of corruption today, but I have to admit it didn’t seem all that futuristic.

Inequality (of both wealth and power, with little seeming distinction between the two) also unites these two worlds with our own. It’s an inequality that extends to privacy, in that only those who have political influence have any. The novel hinges on Flynn being the sole (innocent) witness to a murder. That there is no recording of the crime leaves her incredulous and, disturbingly enough, left me incredulous as well, until Lowbeer explains it to Flynn this way:

“Yours is a relatively evolved culture of mass surveillance,” Lowbeer said. “Ours, much more so. Mr Zubov’s house here, internally at least, is a rare exception. Not so much a matter of great expense as one of great influence.”

“What does that mean?” (Flynn)

“A matter of whom one knows,” said Lowbeer, “and of what they consider knowing you to be worth.” (223)

2014 saw the breaking open of the shell hiding the contours of the surveillance state we have allowed to be built around us in the wake of 9/11, though how we didn’t realize this before Edward Snowden is beyond me. If I were a journalist looking for a story, it would be some version of the surveillance-corruption complex described by Gibson’s detective Lowbeer. That is, I would look for ways in which the blindness of the all-seeing state (or even just of companies with overwhelming surveillance powers) was bought or gained by leveraging influence, or where its concentrated gaze was purchased for use as a weapon against rivals. In a world where one’s personal information can be stolen with simple hacks, or damaging correlations about anyone can be conjured out of thin air, no one is really safe. It is merely an extrapolation of human nature that the asymmetries of power and wealth will ultimately decide who has privacy and who does not. Sadly, again, not all that futuristic.

In both the near and far futures of The Peripheral drones are ubiquitous. Flynn’s brother Burton was himself a haptic drone pilot in the US military, and he and his buddies have surrounded themselves with all sorts of drones. In the far future drones are even more widespread and much smaller. Indeed, Flynn witnesses the aforementioned murder while standing in for Burton as a kind of drone-piloting flyswatter, keeping paparazzi drone flies away from the soon-to-be-killed celebrity Aelita West.

Flynn’s stint as a paparazzi flyswatter in an alternate future she thinks is a video game began in the most human of ways: Netherton trying to impress his girlfriend, Daedra West, Aelita’s sister. By far the coolest future-tech element of the book builds on this, when Flynn goes from being a drone pilot to being the “soul” of a peripheral in order to find Aelita’s murderer.

Peripherals, if I understand them correctly, are quasi-biological puppets. They can act intelligently on their own, but with nowhere near the nuance and complexity they show when a human being is directly controlling them through a brain-peripheral interface. Flynn becomes embodied in an alternative future by controlling the body of a peripheral while she herself remains in an alternative past. Leaves your head spinning? Don’t worry, Gibson is such a genius that in the novel itself it seems completely natural.

So Gibson is warning us about environmental destruction, inequality and corruption, and trying to imagine a world of ubiquitous drones and surveillance. All of this is essential stuff for us to pay attention to, and The Peripheral gives us a frame that might serve as a kind of protection against blindly continuing to head in directions we would rather not go.

Yet the most important commentary on the present I gleaned from Gibson’s novel wasn’t these things, but what it said about a world where the distinction between the virtual and the real has disappeared, where everything has become a sort of video game.

In the novel, what this results in is a sort of digital imperialism and cruelty. Those in Gibson’s far future derisively call the alternative pasts they interfere in “stubs,” though these are worlds as full as their own, with people in them who are just as real as we are.

As Lowbeer tells Flynn:

Some persons or people unknown have since attempted to have you murdered, in your native continuum, presumably because they know you to be a witness. Shockingly, in my view, I am told that arranging your death would in no way constitute a crime here, as you are, according to current legal opinion, not considered to be real. (200)

The situation is actually much worse than that. As the character Ash explains to Netherton:

There were, for instance, Ash said, continua enthusiasts who’d been at it for several years longer than Lev, some of whom had conducted deliberate experiments on multiple continua, testing them sometimes to destruction, insofar as their human populations were concerned. One of these early enthusiasts, in Berlin, known to the community only as “Vespasian,” was a weapons fetishist, famously sadistic in his treatment of the inhabitants of his continua, whom he set against one another in grinding, interminable, essentially pointless combat, harvesting the weaponry evolved, though some too specialized to be of use outside whatever baroque scenario had produced it. (352)

Some may think this indicates Gibson believes we might ourselves be living in a Matrix-style simulation. In fact, I think he’s actually trying to say something about the way the world, beyond dispute, works right now, though we haven’t, perhaps, seen it all in one frame.

Our ability to use digital technology to interact globally is extremely dangerous unless we recognize that there are often real human beings behind the pixels. This is a problem for people who are engaged in military action, such as drone pilots, yes, but it goes well beyond that.

Take financial markets. Some of what Gibson is critiquing is the kind of algorithmic high-speed trading we’ve seen in recent years, and that played a role in the onset of the financial crisis. Those playing with past continua in his far future are doing so in part to game the financial systems there, which they can do not because they have a record of what financial markets in such continua will do, but because their computers are so much faster than those of the “past.” It’s a kind of AI neo-colonialism, itself a fascinating idea to follow up on, but I think the deeper moral lesson of The Peripheral for our own time lies in the fact that such actions, whether destabilizing the economies of continua or throwing them into wars as a sort of weapons-development simulation, are done with impunity because the people in those continua are considered nothing but points of data.

Today, with the click of a button, those who hold or manage large pools of wealth can ruin the lives of people on the other side of the globe. Criminals can essentially mug a million people with a keystroke. People can watch videos of strangers’ children and other people’s loved ones being raped and murdered as if they were playing a game in hell. I could go on, but I shouldn’t have to.

One of the key ways, perhaps the key way, we might keep technology from facilitating this hell, from turning us into cold, heartless computers ourselves, is to remember that there are real flesh-and-blood human beings on the other side of what we do. We should be using our technology to find them and help them, or at least not to hurt them, rather than to target them or flip their entire world upside down without any recognition of their human reality because it somehow benefits us. Much of the same technology that allows us to treat other human beings as bits, thankfully, gives us tools for doing the opposite as well, and unless we start using our technology in this more positive and humane way we really will end up in hell.

Gibson will have grokked the future, and not just the present, if we fail to address these problems he has helped us (or me at least) to see anew. For if we fail to overcome them, we will have continued forward into a very black continuum of the multiverse and turned Gibson into a dark prophet, though he has disclaimed the title.