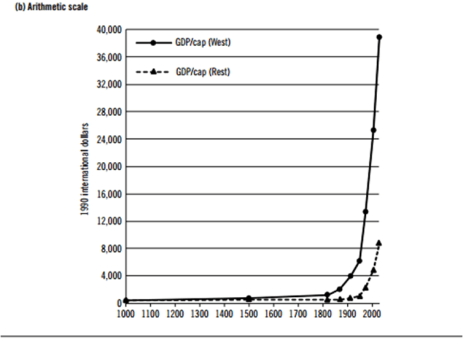

Back in the late winter I wrote a review of the biologist Edward O. Wilson’s grandiloquently mistitled tract, The Meaning of Human Existence. As far as visions of the future go, Wilson’s was a real snoozer, although for that very reason it left little to be nervous about. The hope he articulated in his book was that we somehow manage to keep humanity pretty much the same (genetically, at least) “as a sacred trust”, in perpetuity. It’s a bio-conservatism that, on one level, I certainly understand, but one I also find incredibly unlikely given that the future consists of… well… an awfully long stretch of time (that is, as long as we’re wise enough or just plain lucky). How in the world can we expect, especially in light of current advances in fields like genetics, neuroscience, and artificial intelligence, that we can, or even should, keep humanity essentially unchanged not just now, but for a hundred years, or thousands of years, or tens of thousands of years, or even longer?

If Wilson is the 21st century’s prince of the dull future, the philosopher David Roden should perhaps be crowned the king of the weird one(s). Indeed, it may be that the primary point of his recent mind-bending book Posthuman Life: Philosophy at the Edge of the Human is to make the case for the strange and unexpected. The Speculative Posthumanism (SP) he helps launch with this book is a philosophy that grapples with the possibility that the future of our species and its descendants will be far weirder than we have so far allowed ourselves to imagine.

I suppose the best place to begin a proper discussion of Posthuman Life is with an explanation of exactly what Roden means by Speculative Posthumanism, something that (as John Danaher has pointed out) Roden uncovers, like a palimpsest, by providing some very useful clarifications of often philosophically confused and conflated areas of speculation regarding humanity’s place in nature and its future.

Essentially, Roden sees four domains of thought regarding humanism and posthumanism. There is Humanism of the old-fashioned type, which, even absent any spiritual dimension, claims that there is something special (cognitively, morally, etc.) that marks human beings off from the rest of nature.

Interestingly, Roden sees Transhumanism as merely an updating of this humanism: the expansion of its tool kit for perfecting humankind to include not just things like training and education but also physical, cognitive, and moral enhancements made available by advances in medicine, genetics, bio-electronics, and similar technologies.

Then there is Critical Posthumanism, by which Roden means a move in Western philosophy, apparent since the latter half of the 20th century, that seeks to challenge the anthropocentrism at the heart of Western thinking. The shining example of that anthropocentrism was the work of Descartes, which reduced animals to machines while treating the human intellect as mere “spirit”, as embodied and tangible as a burnt offering to the gods. Critical posthumanists, among whom one can count a number of deconstructionist, feminist, multiculturalist, animal rights, and environmentalist philosophers from the last century, aim to challenge the centrality of the subject and the discourses surrounding the idea of an observer located at some Archimedean point outside of nature and society.

Lastly, there is the philosophy Roden himself hopes to help create, Speculative Posthumanism, the goal of which is to explore and expand the potential boundaries of what he calls the posthuman possibility space (PPS). It is a posthumanism that embraces the “weird” in the sense that it hopes, like Critical Posthumanism, to challenge the hold anthropocentrism has had on the way we think about possible manifestations of phenomenology, moral reasoning, and cognition. Yet unlike Critical Posthumanism, Speculative Posthumanism does not stop at scepticism but seeks to imagine, in so far as it is possible, what non-anthropocentric forms of phenomenology, moral reasoning, and cognition might actually look like. (21)

It is as a work of philosophical clarification that Posthuman Life succeeds best, though a close runner-up is the way Roden explains and synthesizes many of the major movements within modern philosophy and clearly connects them to what many see as coming challenges to traditional philosophical categories posed by emerging technologies: machines that exhibit ever more reasoning, the disappearance of the boundary between the human, the animal, and the machine, or even the erosion of human subjectivity and individuality themselves.

Roden challenges the notion that any potential moral agents of the future that can trace their line of descent back to humanity will be something like Kantian moral agents, rather than agents possessing a moral orientation we simply cannot imagine. He also points towards connections between the postmodern thrust of late 20th-century philosophy, which challenged the role of the self/subject, and recent developments in neuroscience, including connections between philosophical phenomenology and the neuroscience of human perception, which pose a very similar challenge to our conception of the self. Indeed, Posthuman Life eclipses similar efforts at synthesis, and Roden excels at bringing to light potentially pregnant connections between thinkers as diverse as Andy Clark, Heidegger, Donna Haraway, Deleuze, and Derrida, along with non-philosophical figures like the novelist Philip K. Dick.

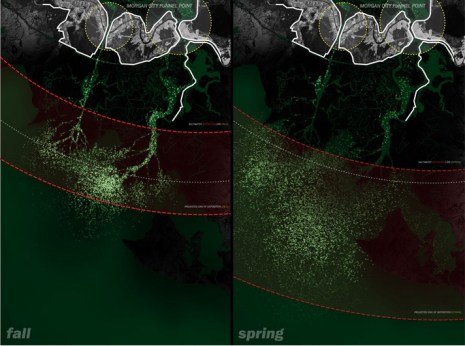

It is as a consequence of this very success at philosophical clarification that Roden crosses what I, at least, felt was a bridge (philosophically) too far. As posthumanist philosophers are well aware, the very notion of the “human” suffers from a continuum problem. Uniquely among animals, humanity is almost impossible to separate from technology broadly defined, and this is the case even if we go back to the very beginnings of the species, where the technologies in question are the atlatl or the baby sling. We are, in the words of Andy Clark, “natural-born cyborgs”. In addition, like anything bound up with historical change, the definition of a human being is a moving target rather than a reflection of any unchanging essence.

How then can one declare any possible human future that emerges out of our continuing “technogenesis” to be “post” human, rather than just the latest iteration of what is in fact the very old story of the human “artificial ape”? This status of mere continuation (rather than a break with the past) would seem to hold in a philosophical sense even if whatever posthumans emerged bore no genetic relationship, and only a techno-historical one, to biological humans. This somewhat different problem of philosophical clarification again emerges from another continuum problem: the fact that human beings are inseparable from the techno-historical world around them, what Roden brilliantly calls “the Wide Human” (WH).

It is largely the effort to find clear boundaries within this confusing continuum that leads Roden to postulate what he calls the “disconnection thesis”. According to this thesis, an entity can properly be called posthuman only if it is no longer contained within the Wide Human. A “Wide Human descendant is a posthuman if and only if:”

- It has ceased to belong to WH (the Wide Human) as a result of technical alteration.

- Or is a wide descendant of such a being (outside WH). (112)

Yet it isn’t clear, to me at least, why disconnection from the Wide Human is more likely to produce something different from humanity and our civilization as they exist today than anything that could emerge out of, but still remain part of, the Wide Human itself. Roden turns to the idea of “assemblages” developed by Deleuze and Guattari in an attempt to conceptualize how such a disconnection might occur, but his idea is perhaps conceptually clearer if one approaches it from the perspective of the kind of evolutionary drift that occurs when one population of creatures becomes isolated from another, say, on an island.

As Darwin realized on his journey to the Galapagos, isolation can lead quite rapidly to wide differences between the isolated variant and its parent species. The problem with applying such isolation analogies to technological development is that, unlike biological evolution (or technological development before the modern era), the evolution of technology is now globally distributed, rapid, and continuous, leaving little room for the kind of prolonged isolation that drives such divergence.

Something truly disruptive seems much more likely to emerge from within the Wide Human than from some separate entity or enclave, even one located far out in space, if only because the Wide Human possesses the kind of leverage that could turn something disruptive into something so transformative that it could be characterized as posthuman.

What I think we should look out for, in terms of the kind of weird divergence from current humanity that Roden is contemplating (and, though he claims speculative posthumanism is not normative, is perhaps rooting for), is something more akin to a phase change, or to the kinds of rapid evolutionary change seen in events like the Cambrian explosion or the opening up of whole new evolutionary theaters, such as when life first moved from the sea onto the land, than to some sort of separation. It might resemble the singularity predicted by Vernor Vinge, though it might just as likely come from a completely unanticipated direction and bring about a transformation that would make the world, from our current perspective, unrecognizable and, indeed, weird.

Still, what real posthuman weirdness would seem to require is something Roden clearly identifies, and something that does not depend, by my lights, on his disconnection thesis being true. The same reality that would make whatever follows humanity truly weird would be the one that allows alien intelligence to be truly weird; namely, that the kinds of cognition, logic, mathematics, and science found in our current civilization, and the kinds of biology and social organization we ourselves possess, are all contingent. In essence, this would mean there are a multitude of ways intelligence and technological civilizations might manifest themselves, of which we are only a single type, and by no means the most interesting one. Life itself might be like that, with the earthly variety and its conditions just one example of what is possible, or it might not.

The existence of alien intelligence and technology very different from our own would mean that we are not in the grip of any deterministic developmental process and that alternative developmental paths are available. So far, we have no evidence one way or the other, though, unlike Kant, who used aliens as a trope to defend a certain version of what intelligence and morality mean, we might instead imagine both extraterrestrial and earthly alternatives to our own.

While I can certainly imagine what alternative (and, from our view, weird) forms of cognition might look like, for example the kinds of distributed intelligence found in a cephalopod or a eusocial insect colony, it is much more difficult for me to conceive what morality and ethics might look like if divorced from our own peculiar hybrid of social existence and individual consciousness (the very features Wilson, perhaps rightly, hopes we will preserve). For me, at least, one side of what Roden calls dark phenomenology is a much deeper shade of black.

What I find especially difficult to imagine in this regard is how the openness to alternative developmental paths, which Roden at the very least wants us to refrain from preemptively closing off, is compatible with a host of other projects surrounding our relationship to emerging technology that I find extremely important: projects such as subjecting technology to stricter, democratically established ethical constraints, including engineering moral philosophy into machines themselves as the basis for ethical decision-making autonomous from human beings. Nor is it clear what guidance Roden’s speculative posthumanism provides on the question of how to regulate against existential risks, dangers which, if we fail to tackle them, will foreclose not only a human future but very likely the possibility of a posthuman future as well.

Roden seems to think that, because there is no such thing as a human “essence”, we should be free to engender whatever types of posthumans we want. As I see it, this kind of ahistoricism is akin to a parent who refuses to use the lessons learned from a difficult youth to inform his own parenting. Despite the pessimism of some, humanity has actually made great moral strides over the arc of its history and should certainly use those lessons to inform whatever posthumans we choose to create.

One would think the types of posthumans whose creation we permit should be constrained by our experience of a world ill-designed by the God of Job. How much suffering is truly necessary? Certainly less than sapient creatures currently experience, and thus any posthumans should suffer less than we do. We must be alert to, and take precautions against, the danger that posthuman weirdness will emerge from those areas of the Wide Human where the greatest resources are devoted, namely military or corporate competition, and for that reason be terrifying.

Yet the fact that Roden has left one with questions should not detract from what he has accomplished; namely, he has provided us with a framework in which much of modern philosophy can be used to inform the unprecedented questions we are facing as a result of emerging technologies. Roden has also managed to put a very important bug in the ear of all those who would move too quickly to prohibit technologies that have the potential to prove disruptive, or to close the door on the majority of the (hopefully very long) future in front of us and our descendants: that in too great an effort to preserve the contingent reality of what we currently are, we risk preventing the appearance of something infinitely more brilliant in our future.